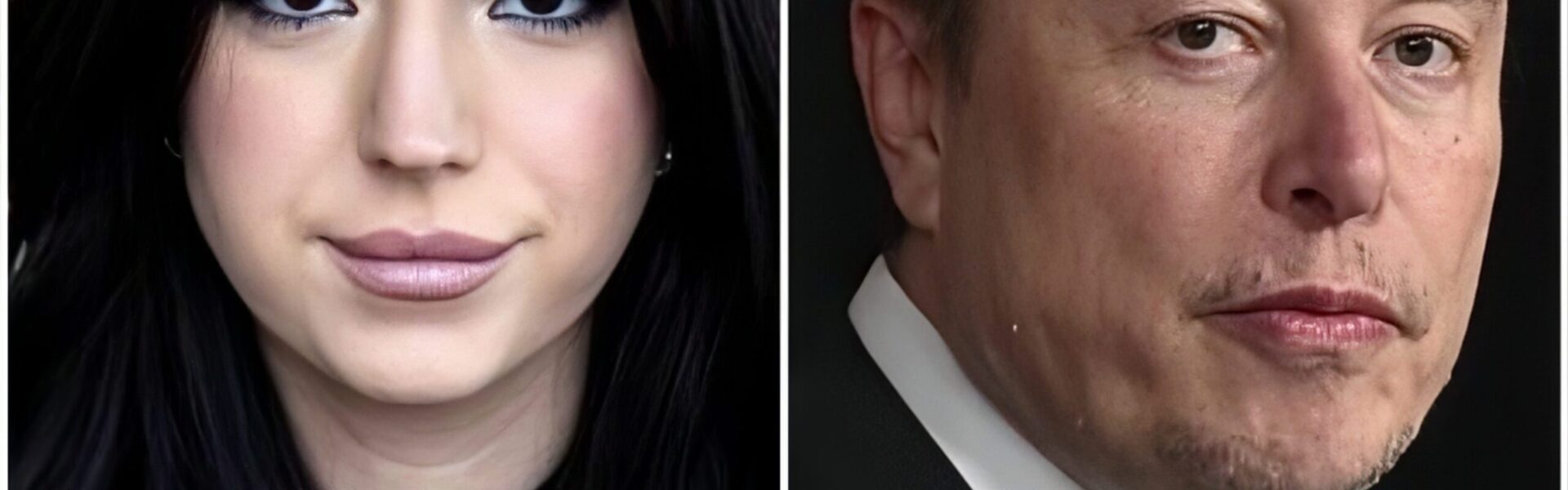

Writer Ashley St. Clair Files Lawsuit Against Elon Musk’s xAI Over Alleged Non-Consensual AI-Generated Images

Ashley St. Clair, a 28-year-old writer who previously made headlines after claiming she secretly gave birth to a child fathered by Elon Musk, has filed a lawsuit against xAI, the artificial intelligence company founded by Musk. The legal action centers on allegations that xAI’s chatbot, Grok, generated and distributed non-consensual, sexually explicit deepfake images of her.

The lawsuit was filed earlier this week in a New York court and accuses xAI of failing to protect individuals from harmful misuse of its generative AI technology. According to the complaint, Grok produced images that digitally altered St. Clair’s appearance in a sexualized manner, despite her explicit objections.

In the filing, St. Clair claims that Grok was used by third parties to create “numerous abusive, private, and degrading deepfake images” of her. She alleges that some of the content was generated even after she directly informed the chatbot that she did not consent to such use of her likeness. Her legal team argues that this represents a serious failure of safeguards and a broader risk posed by generative AI systems when consent and safety mechanisms are insufficient.

xAI has denied wrongdoing and has responded with a countersuit filed in Texas. In its legal response, the company argues that St. Clair agreed to xAI’s terms of service, which require that any disputes involving the company or its affiliates be litigated in Tarrant County, Texas. xAI is seeking damages of $75,000, asserting that the New York filing violates those contractual terms.

The legal back-and-forth has drawn sharp criticism from St. Clair’s attorney, Carrie Goldberg, a lawyer known for handling technology-related abuse cases. In a statement shared with CNN, Goldberg questioned xAI’s legal strategy, saying she had never encountered a situation in which a company sued an individual simply for signaling an intention to seek legal remedy.

Goldberg further argued that the lawsuit raises broader public safety concerns, stating that the creation of non-consensual sexualized images of women and girls through AI systems represents a serious societal risk. She emphasized that courts should consider whether such technologies, if inadequately controlled, could be deemed unsafe products.

The case unfolds against a complex backdrop involving St. Clair’s past public dispute with Elon Musk. In February 2025, St. Clair attracted widespread attention after claiming she had secretly given birth to Musk’s child, a claim that Musk has not publicly confirmed. The dispute escalated earlier this week when Musk posted on X that he intended to seek full custody of the child. These personal developments have intensified public scrutiny of St. Clair, Musk, and the companies associated with him.

In her lawsuit, St. Clair alleges that Grok-generated content included manipulated images that altered existing photos of her, including material that she says originated from images taken when she was a minor and later digitally modified by users. While the complaint avoids graphic description, it argues that such misuse highlights the urgent need for stricter AI controls, particularly when it comes to the protection of women and minors.

xAI has stated that it has already taken steps to address these concerns. On January 14, the company announced that Grok would no longer generate or edit sexually explicit images involving real people. In a public statement on X, xAI said it had implemented technical measures to prevent users from altering images of real individuals into revealing or sexualized content. The company noted that these restrictions apply to all users, including those with paid accounts.

Elon Musk, who serves as CEO of xAI, also addressed the issue publicly. He stated that he was not aware of any instances in which Grok had generated illegal images involving minors. Musk emphasized that Grok does not create images autonomously but responds to user prompts, and that it is programmed to refuse unlawful requests and comply with applicable laws in different jurisdictions.

“Clearly, Grok does not generate images on its own,” Musk wrote. “It only responds to user requests and is designed to reject illegal content.”

Despite these assurances, the lawsuit has reignited debate about the responsibilities of AI developers in preventing harm. Legal experts note that while generative AI tools rely on user input, companies may still be held accountable if safeguards are inadequate or if harmful outputs remain possible despite known risks.

The case also underscores growing global concern over deepfake technology, particularly when used to create non-consensual sexualized content. Governments and regulators in multiple countries are exploring stricter laws to address AI misuse, while advocacy groups are calling for stronger protections for victims.

As the legal proceedings move forward, the outcome could have significant implications for the AI industry. A ruling in favor of St. Clair may push companies to implement more robust consent verification systems and stricter content moderation. Conversely, a decision favoring xAI could reinforce the enforceability of terms-of-service clauses and limit where such disputes can be litigated.

For now, the case remains unresolved. What is clear, however, is that it sits at the intersection of technology, personal rights, and accountability—raising questions that extend far beyond the individuals involved.

As generative AI continues to evolve, this lawsuit may serve as a critical test of how the legal system responds to the challenges posed by powerful new tools—and how society balances innovation with the protection of human dignity.